Have you ever looked under the hood of a poorly performing website? It’s terrifying. There’s code spewing everywhere. Stylesheets barely connect. A paper clip is somehow holding together the header. The bandwidth is running low. Who knows the last time the redirects were audited. And the HTTP requests! So, so many HTTP requests. Don’t even get us started on the HTTP requests.

Actually, on second thought, get us started. HTTP requests are a big deal in the world of web optimization, especially now that speed has become so vital to visibility. Making sure your requests are running smoothly is no longer a choice; it’s a necessity.

So let’s take a closer look at how to reduce your HTTP requests and turn your site into a hot rod by giving it a request tune-up. Because if you’ve ever looked under the hood of a well-maintained, high-performing website, it’s a thing of beauty. Not to mention, it runs like an SEO champ.

What is an HTTP Request?

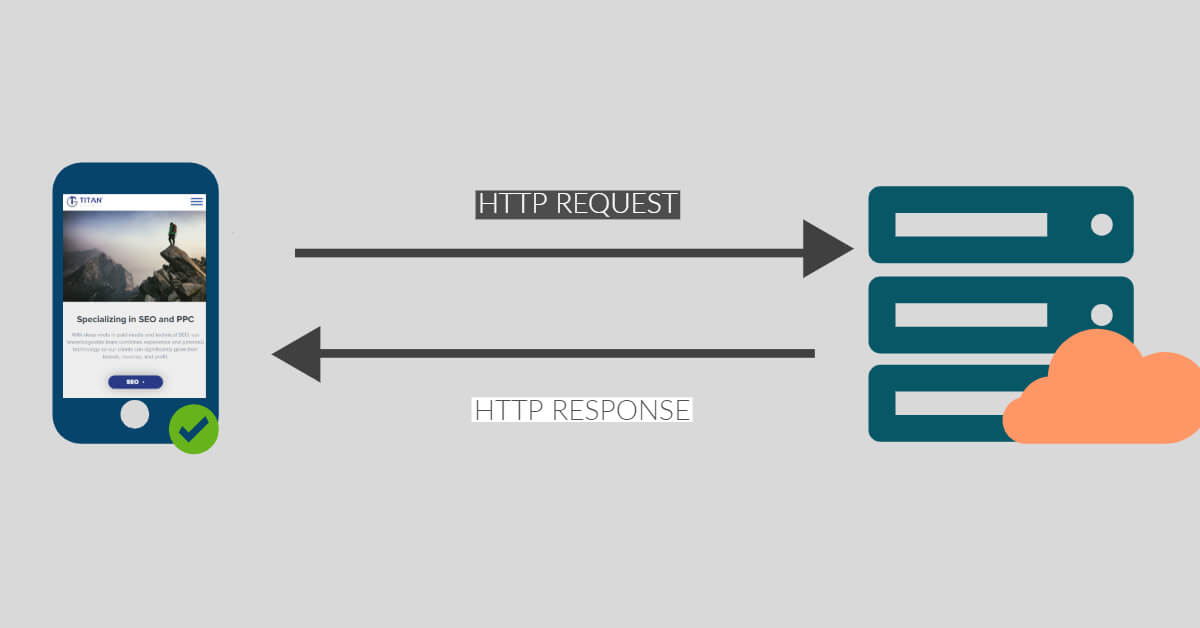

An HTTP request is a message sent from a client to a server requesting information using the Hypertext Transfer Protocol (HTTP). It is the method by which a web browser (client) receives the files necessary to display a web page. These files include images, text, CSS, JavaScript, and the like.

Each time you visit a web page, here’s what goes down:

- Your browser says hi to the page’s hosting server and asks if it will send over a file containing content associated with the page, like a video

- The server either says ‘get lost’ or graciously grants the request

- If granted, the browser says ‘thank you’ and begins rendering the page

- If the browser needs more content to render the page, it sends another request

- This repeats until all requests have been received and the page is fully loaded

- You can now watch that video recap of last week’s Downton Abbey episode you’ve been patiently waiting to play

At its core, an HTTP request is a fancy way of saying “sending things back and forth” between a server and a browser.

Why is Reducing HTTP Requests Important?

As you can see from our example above, HTTP requests are a key component of displaying your site, but all that talking back and forth takes time. In fact, Yahoo has reported that 80% of a web page’s load time is spent downloading the files those HTTP requests ask for.

A web page needs to download information like images, stylesheets, and scripts in order to display properly. This information is retrieved via an HTTP request sent by a browser to your site’s servers. The more HTTP requests, the longer it takes your page to load. The longer a page takes to load, the greater the likelihood of users leaving your site, and the less likely the page will appear high in SERPs. That’s bad for business all around.

In fact, studies have shown a mere increase from 2 to 5 seconds in page load time can result in bounce rates soaring from 9% to 38%.

So, reducing HTTP requests is good for both SEO and user experience.

How Many HTTP Requests Should Web Pages Have?

You should strive to keep the number of HTTP requests under 50. If you can get requests below 25, you’re doing amazing.

By their nature, HTTP requests are not bad. Your site needs them to function and look good. But you probably don’t need as many HTTP requests as you have. Overall, your goal should be to make fewer HTTP requests without sacrificing user experience or page functionality.

Currently, the average number of requests per page is around 70. But we know you can do better than that.

A Note on Minimizing Round-Trip Times

Reducing the number of HTTP requests begins here. Literally.

Round-Trip Time (RTT) is the time it takes for a request to travel from a visitor’s browser to your server and for the response to come back. To load properly, files need to be requested individually, and every request costs at least one round trip. That can add up to a lot of time. Fewer requests means fewer round trips, and less time spent waiting.
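To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It is a simplification, not a measurement: it assumes a browser fetches files in "waves" of six parallel connections (a commonly cited HTTP/1.1 default) and it ignores download time entirely. The 100 ms RTT and the function name are made up for illustration.

```python
import math


def estimated_wait_ms(num_requests, rtt_ms, parallel_connections=6):
    """Rough estimate of time spent waiting on round trips alone.

    Assumption: requests go out in waves of `parallel_connections`,
    and each wave costs one round trip. Real browsers are messier.
    """
    waves = math.ceil(num_requests / parallel_connections)
    return waves * rtt_ms


# Hypothetical 100 ms round trip.
print(estimated_wait_ms(70, 100))  # 12 waves -> 1200
print(estimated_wait_ms(25, 100))  # 5 waves  -> 500
```

Under those assumptions, trimming a page from 70 requests to 25 cuts the waiting from about 1.2 seconds to half a second before a single byte of content is counted.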

Some speed improvement guides count minimizing RTT as the be-all and end-all task. And there are some solutions (like CDNs) that can lower RTT without you needing to do much else. But while that may improve speed short-term, it doesn’t lower the number of HTTP requests.

So if you’re interested in improving the overall efficiency and performance of your site (without band-aids), then continue reading! Below we’ll dive into the specifics of reducing HTTP requests (and thus the total time spent on round trips) with tasks like CSS Sprites, concatenation, and trimming redirects.

So, let’s get our hands dirty. (Not literally.)

1. Combine HTML, CSS, JavaScript Files

Combining files (aka “concatenation” if you’re a word nerd) works just like it sounds. If your site runs multiple CSS and JavaScript files, this solution combines them into one. Because requesting one file is a lot faster than requesting ten.

A site can have multiple CSS files and multiple JS files. Run a CSS test and JS test for your site to see how many you have. If you have more than one external CSS or JS file, you might try combining them into a single CSS or JS file.

Some WordPress plugins like WP Rocket, WP-Minify, or W3 Total Cache help you automatically concatenate. In other cases, you will need to use a third-party online tool or do it manually. Note, you can only combine files hosted on your site. If the file’s domain is something other than your own, you can’t combine it.

Be aware that combining files might not always be right for your site. Certain functions could stop working, or content may not load properly. Back up your site before making any changes. If anything seems off after combining, either undo the concatenation or locate the specific files causing the issue and refrain from combining them with the others.
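If you go the manual route, the idea can be sketched in a few lines of Python. This is a minimal illustration, not what any of the plugins above actually do: the `concatenate` function and the file names are hypothetical, and it simply joins files in the order you list them.

```python
from pathlib import Path


def concatenate(paths, output_path):
    """Join the given files, in order, into a single bundle file."""
    parts = []
    for path in paths:
        text = Path(path).read_text(encoding="utf-8")
        # Label each part so a problem can be traced back to its source file.
        # /* ... */ comments are valid in both CSS and JavaScript.
        parts.append(f"/* {Path(path).name} */\n{text.rstrip()}\n")
    Path(output_path).write_text("\n".join(parts), encoding="utf-8")
    return output_path


# Hypothetical usage: three stylesheets become one request.
# concatenate(["reset.css", "layout.css", "theme.css"], "bundle.css")
```

Order matters here. Later CSS rules override earlier ones, and scripts often depend on code loaded before them, so list the files in the same order your page loads them today.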

Concatenation and HTTP/2

In our guide on improving page load speed, we discussed the benefits of HTTP/2, which is designed to transfer multiple small files simultaneously. Combining CSS and JS files can still improve load performance in HTTP/2, but instead of creating a single file, it’s best to group them into several smaller bundles of related content. In other words, if you have ten JS files, instead of combining them into one file, group them into three or four based on how related they are to one another.

2. Prioritize File Placement

File placement plays a big role in request speed. As a best practice, CSS relating to design and interface should appear at the top of the page in the <head> section. These stylesheets are integral to showing a page properly and need to load as fast as possible.

JavaScript, on the other hand, should go at the bottom of the page, right above the </body> tag. This is because loading scripts can severely delay rendering of the page.

But before you go shuffling around your files, don’t forget to….

3. Defer Parsing of JavaScript

Not all JavaScript is created equal. Some JS is necessary to load a web page, and solely moving it to the bottom of the page is not enough. That’s where deferring JavaScript comes in.

If you defer the parsing of JS, the browser keeps building the page while the script downloads, and any render-blocking, non-critical JS runs only after the page’s HTML has been parsed, while critical JS still executes normally. There are plenty of plugins, like W3 Total Cache, that can do this for you automatically. Or you can defer JavaScript manually by adding the defer attribute to a script’s <script> tag.
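Before deferring anything, it helps to know which scripts are blocking in the first place. Here is a small Python sketch that scans a page’s HTML for external scripts in the <head> that have neither `defer` nor `async`. The class and function names and the sample markup are made up for illustration, and it only sees static HTML, not scripts injected later by other scripts.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects external scripts in <head> that lack defer or async."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attrs = dict(attrs)
            deferred = (
                "defer" in attrs
                or "async" in attrs
                or attrs.get("type") == "module"  # module scripts defer by default
            )
            if attrs.get("src") and not deferred:
                self.blocking.append(attrs["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html):
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking


SAMPLE_PAGE = """
<html><head>
  <script src="/js/analytics.js"></script>
  <script src="/js/carousel.js" defer></script>
  <script src="/js/ads.js" async></script>
</head><body><p>Hello</p></body></html>
"""

print(find_blocking_scripts(SAMPLE_PAGE))  # ['/js/analytics.js']
```

Anything this turns up is a candidate for `defer`, as long as the page doesn’t need it to render.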

4. Fix Broken Links

Fixing broken links (404 errors) isn’t just good for improving page load time; it’s smart SEO all around. When a page references a file that no longer exists, such as a missing image, script, or stylesheet, the browser still sends an HTTP request for it and waits for the 404 to come back. A link that directs visitors to a page that doesn’t exist wastes their click in the same way. Either way, it keeps your site waiting longer, drains bandwidth, and wastes resources.

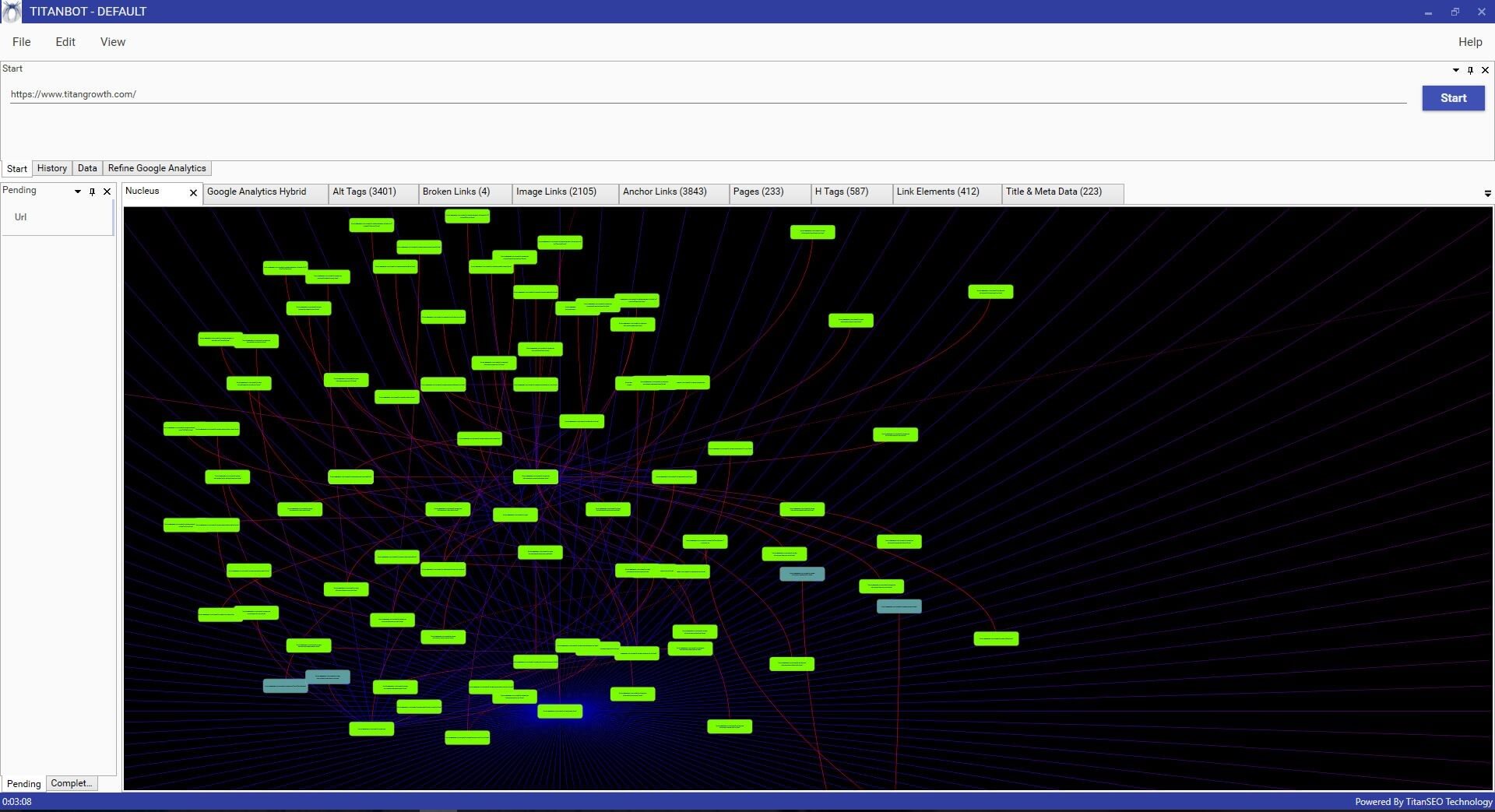

Our team leverages TitanBOT to find all broken links. If you are not currently working with our team, you can also find broken links using an online tool like Broken Link Checker.
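If you’d rather script a quick check yourself, something like the following Python sketch would do for a short list of URLs. It is a rough illustration and not how TitanBOT or Broken Link Checker work internally. The function names are hypothetical, and note that `urlopen` follows redirects on its own and that some servers reject HEAD requests, so treat the results as a first pass.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status code for a URL, or None if it is unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses land here
    except (urllib.error.URLError, OSError):
        return None  # DNS failure, timeout, refused connection, etc.


def find_broken_links(urls, get_status=fetch_status):
    """Return the URLs that answer with a 4xx/5xx status or not at all."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken


# Offline demo with made-up statuses instead of real network calls.
fake_statuses = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 404,
    "https://www.example.com/down": None,
}
print(find_broken_links(fake_statuses, get_status=fake_statuses.get))
```

To check real pages, call `find_broken_links` with a list of your own URLs and leave `get_status` at its default.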

5. Reduce Redirects

Having 301 (permanent) or 302 (temporary) redirects is definitely preferable to 404 errors (broken links), but they are still not ideal. In a perfect, speedy world, there would be no redirects. But that’s not really practical.

A TitanBOT crawl is a great way to identify 301 redirects. There are also additional tools, like httpstatus, available that will check status codes for a list of URLs. Run a scan and sort by “Status Code.” See if there are any 301s that are unnecessary. Look closely for redirect chains. These are redirects that point to other redirected pages. Redirect chains are the worst. If you must have a redirect, cut out the middleman and have only one link redirecting to the most recent version of a page.
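Cutting out the middleman is easy to reason about once your redirects are written down as a simple source-to-target map. The Python sketch below assumes you have exported such a map from a crawl. The paths and function names are invented for the example.

```python
def resolve_final(url, redirects, max_hops=10):
    """Follow a redirect map from url to its final destination.

    Returns (final_url, number_of_hops).
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError("redirect loop or overly long chain")
        seen.add(url)
    return url, hops


def find_chains(redirects):
    """Return sources that need more than one hop to reach a real page."""
    chains = {}
    for source in redirects:
        _, hops = resolve_final(source, redirects)
        if hops > 1:
            chains[source] = hops
    return chains


def flatten_redirects(redirects):
    """Point every source directly at its final destination."""
    return {source: resolve_final(source, redirects)[0] for source in redirects}


# Hypothetical redirect map: /old -> /newer -> /newest is a chain.
redirects = {"/old": "/newer", "/newer": "/newest", "/promo": "/sale"}

print(find_chains(redirects))        # {'/old': 2}
print(flatten_redirects(redirects))  # {'/old': '/newest', '/newer': '/newest', '/promo': '/sale'}
```

The flattened map is what you would then write back into your redirect rules, so every old URL costs one extra request instead of two or more.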

Also, be sure to check your .htaccess or other server configuration files to make sure you don’t have any old legacy redirects. These can really add up and slow down your site if unchecked.

6. Use CSS Sprites

A CSS Sprite is one large image file that combines many of the smaller images used on your site, such as icons, logos, and other graphics. Think of it as a map, where each image sits at its own set of coordinates. CSS is then used to show only the relevant part of the sprite wherever each image is needed on the page.

It is faster for a browser to load one big image than a lot of smaller images. Why? You guessed it! Because doing so requires fewer requests.

SpriteMe and the aptly named Gift of Speed both offer handy CSS Sprite generators.
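To see what those coordinates look like, here is a Python sketch that produces the CSS half of a sprite. It assumes the icons have been placed side by side, left to right, in a single image. The function name, class names, icon sizes, and `sprite.png` are all hypothetical, and the image itself would still need to be built by a generator or an image editor.

```python
def sprite_css(icons, sprite_url="sprite.png"):
    """Return CSS rules that crop each icon out of a horizontal sprite sheet.

    `icons` is a list of (name, width, height) tuples in left-to-right order.
    """
    rules = []
    x = 0
    for name, width, height in icons:
        # A negative x offset slides the sheet left so this icon shows through.
        offset = f"-{x}px" if x else "0"
        rules.append(
            f".icon-{name} {{ background: url('{sprite_url}') {offset} 0 no-repeat; "
            f"width: {width}px; height: {height}px; }}"
        )
        x += width
    return "\n".join(rules)


print(sprite_css([("home", 16, 16), ("search", 16, 16), ("cart", 24, 16)]))
```

Three icons, one image, one request. Each rule just slides the same sheet to a different position.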

7. Don’t Use @Import

Avoid using CSS @import to connect to your stylesheets. Instead, directly link to them using the <link> tag.

A CSS @import operates client-side: the browser only discovers the imported stylesheet after it has downloaded and read the file that imports it, so each @import adds another request on top of the main file request, one after the other. That’s too many requests for that sentence, let alone for your site. A <link> tag allows browsers to download stylesheets in parallel, which is much simpler and faster. Besides, some older browsers don’t support @import at all, so it’s best to avoid it whenever possible.
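If a stylesheet is already littered with @import rules, a short script can list the <link> tags that should replace them. The Python sketch below is a rough helper under a few assumptions: it handles the common `@import url("file.css");` and `@import "file.css";` forms, it drops any media condition after the URL, and the function name and sample CSS are made up for illustration.

```python
import re

# Matches @import url("x.css"); and @import 'x.css'; (quotes optional).
IMPORT_RULE = re.compile(
    r"""@import\s+(?:url\(\s*)?["']?([^"')\s;]+)["']?\s*\)?[^;]*;""",
    re.IGNORECASE,
)


def imports_to_links(css):
    """Return (link_tags, remaining_css) for a stylesheet that uses @import."""
    urls = IMPORT_RULE.findall(css)
    links = [f'<link rel="stylesheet" href="{url}">' for url in urls]
    remaining = IMPORT_RULE.sub("", css).strip()
    return links, remaining


SAMPLE_CSS = """
@import url("reset.css");
@import 'typography.css';
body { margin: 0; }
"""

links, remaining = imports_to_links(SAMPLE_CSS)
print("\n".join(links))
print(remaining)
```

The tags go in the <head> of your page, and what’s left of the stylesheet keeps working as before, minus the extra hop.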

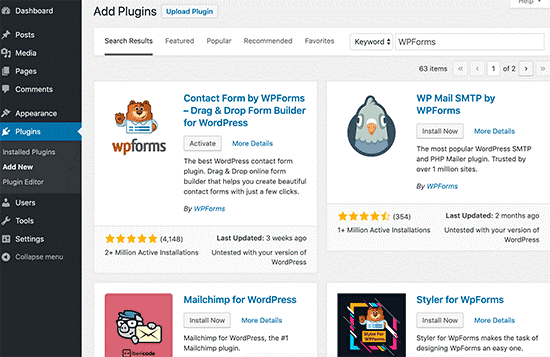

8. Reduce External Scripts

External scripts are code pulled from third-party locations rather than hosted on your server. They include commenting modules, analytics tools, external fonts like Google Fonts, social media boxes, and so on. For example, when you allow Google Analytics or Facebook Pixel to track your site, that adds an external script and, with it, an extra HTTP request.

Some external scripts are extremely useful and vital to your business, but some are just clutter. Find any that are unnecessary and eliminate them. Maybe you have an email signup pop-up on your site that you no longer want. Remove it, and you’ll remove an HTTP request. Most performance tools like GTmetrix and Google PageSpeed Insights will show you which external scripts are using the most resources.
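For a quick inventory of who else your page is calling, a sketch like this one in Python tallies third-party hosts referenced in a page’s HTML. The site host, class and function names, and sample markup are assumptions for the example, and it won’t catch requests that scripts trigger after they load.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urlparse


class ExternalResourceFinder(HTMLParser):
    """Counts resources loaded from hosts other than the site's own."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host
        self.hosts = Counter()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        url = None
        if tag in ("script", "img", "iframe"):
            url = attrs.get("src")
        elif tag == "link" and "stylesheet" in (attrs.get("rel") or "").lower().split():
            url = attrs.get("href")
        if not url:
            return
        host = urlparse(url).netloc  # empty for relative URLs like /js/app.js
        if host and host != self.site_host:
            self.hosts[host] += 1


def external_hosts(html, site_host):
    finder = ExternalResourceFinder(site_host)
    finder.feed(html)
    return dict(finder.hosts)


SAMPLE_PAGE = """
<head>
  <link rel="stylesheet" href="https://fonts.googleapis.com/css?family=Roboto">
  <script src="https://www.google-analytics.com/analytics.js"></script>
  <script src="/js/app.js"></script>
</head>
<body>
  <img src="https://www.example.com/img/logo.png">
  <script src="https://connect.facebook.net/en_US/fbevents.js"></script>
</body>
"""

print(external_hosts(SAMPLE_PAGE, "www.example.com"))
```

Each host in the result is at least one request you don’t control, so it’s a handy list to review with whoever asked for those scripts in the first place.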

9. Enable Keep-Alive

When someone visits your page, their browser has to open a connection to your server before it can download any page files. HTTP Keep-Alive keeps a single connection open between the two, which allows multiple files to be downloaded without repeating that handshake for every request. This saves a lot of time and server resources.

To enable HTTP Keep-Alive, copy and paste the following code into your .htaccess file (take backups first):

    <IfModule mod_headers.c>
        Header set Connection keep-alive
    </IfModule>

10. Add Expires Headers

If you’ve ever run a performance test on GTmetrix, there’s a good chance you got an ‘F’ for leveraging browser caching. That’s because you didn’t set expiry times for your files. (Don’t worry, we’ve all done it.)

A web server uses an Expires header to tell browsers how long to cache something. When someone visits one of your pages, their browser stores a version of it in its cache. (Unless they have caching disabled or are using a private window.) If they return to that page, instead of requesting all the files again from your servers, the browser loads them from the cache. This is a lot faster. Adding Expires headers dictates this process, reducing the number of HTTP requests.

On WordPress, you can get a plugin to add Expires headers for you. Or better yet, avoid adding a new plugin, and leverage browser caching manually.
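To show what the header itself looks like, here is a small Python sketch that builds one for a given cache lifetime. It also adds `Cache-Control: max-age`, which isn’t covered above but is the newer equivalent that browsers prefer when both are present. The function name and the 30-day lifetime are just examples, and how you attach the headers depends on your server.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def caching_headers(max_age_seconds, now=None):
    """Build Expires and Cache-Control headers for a given cache lifetime."""
    now = now or datetime.now(timezone.utc)
    expires = now + timedelta(seconds=max_age_seconds)
    return {
        # HTTP dates are always expressed in GMT.
        "Expires": format_datetime(expires, usegmt=True),
        "Cache-Control": f"max-age={max_age_seconds}",
    }


# Example: cache images for 30 days, starting from a fixed date.
THIRTY_DAYS = 30 * 24 * 60 * 60
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(caching_headers(THIRTY_DAYS, now=start))
# {'Expires': 'Wed, 31 Jan 2024 00:00:00 GMT', 'Cache-Control': 'max-age=2592000'}
```

Long lifetimes suit files that rarely change, like logos and fonts. Files you update often are better off with shorter ones.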

11. Reduce Plugins

Above, we’ve tried to provide both plugin and manual solutions to implementing tasks. That’s because while plugins make life easier, they don’t always make pages load faster.

Not only do plugins add to the size of your site as a whole, but they also generate HTTP requests. In some cases, a single plugin sends multiple requests. For example, Disqus is called on every page of your site, regardless of whether it’s being used on that page, which creates a lot of unnecessary requests.

Remove any WordPress plugins you don’t need or try to find lighter alternatives to ones you’re currently using. Page Performance Profiler is an easy way to analyze your plugins and see which are the most resource-heavy. (Just be sure to remove it once you’ve finished.)

Testing Your Efforts

How’s your website running now?

You can test to see total HTTP requests for your pages (and benchmark your reduction efforts) using tools like GTmetrix and Pingdom. If you successfully implement the tasks above (even just a few), you’ll notice an immediate difference in your site speed. Best of all, if done right, user experience will go unaffected and your visitors will be none the wiser to all the tinkering you did under the hood. They’ll just appreciate how fast your site is loading.
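For your own before-and-after numbers, you can also export a HAR file (a standard JSON log of every request a page made) from your browser’s developer tools and count the entries. The Python sketch below assumes that format. The function name and the tiny sample log are invented for the example.

```python
import json
from collections import Counter


def summarize_har(har):
    """Return (total_requests, requests_by_mime_type) for a parsed HAR log."""
    entries = har["log"]["entries"]
    by_type = Counter(
        # Drop parameters such as "; charset=utf-8" from the MIME type.
        entry["response"]["content"].get("mimeType", "unknown").split(";")[0].strip()
        for entry in entries
    )
    return len(entries), dict(by_type)


# Tiny made-up log; a real one would come from json.load(open("page.har")).
SAMPLE_HAR = json.loads("""
{"log": {"entries": [
  {"request": {"url": "https://www.example.com/"},
   "response": {"content": {"mimeType": "text/html; charset=utf-8"}}},
  {"request": {"url": "https://www.example.com/css/main.css"},
   "response": {"content": {"mimeType": "text/css"}}},
  {"request": {"url": "https://www.example.com/img/logo.png"},
   "response": {"content": {"mimeType": "image/png"}}},
  {"request": {"url": "https://www.example.com/img/hero.png"},
   "response": {"content": {"mimeType": "image/png"}}}
]}}
""")

total, by_type = summarize_har(SAMPLE_HAR)
print(total)    # 4
print(by_type)  # {'text/html': 1, 'text/css': 1, 'image/png': 2}
```

Run it once before you start and once after, and the difference is your tune-up in hard numbers.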

Vroom vroom!